CXO Guide for Generative AI Governance + Responsible Use

Executive Summary Up Front – Generative AI Basics

- Generative AI (GenAI) is creating a paradigm shift in how information is consumed as well as produced, thereby changing how businesses will be organized from this point forward

- Accessibility, Versatility, and Productivity are the three primary drivers of explosive GenAI adoption

- GenAI has created two broad categories of risk. First, Model Risk, which refers to the risk of errors, biases, bad data, harmful output, and loss of regulatory compliance. Second, Usage Risk, which refers to the risk of inappropriate usage, loss of data, loss of privacy, loss of intellectual property, unmanaged productivity, and overall loss of GenAI Visibility

- GenAI should be embraced as long as enterprises follow Responsible Usage as well as Responsible Creation processes. Responsible Creation includes two aspects – ensuring algos are transparent and bias-free, and ensuring training data is clean and compliant

- Portal26’s GenAI Governance Suite addresses two of the three responsible AI requirements: Portal26 Trail, a GenAI Governance and AI TRiSM Platform that supports Responsible Usage of GenAI across the enterprise, and Portal26 AI Vault, which supports Responsible Creation of GenAI via clean training data pipelines.

Here at Portal26, we have been busy adding capabilities that help us meet the exciting new opportunities presented by the Generative AI movement while still supporting the rich data security use cases we have been offering for the last few years.

This blog is focused on Portal26’s Generative AI (GenAI) governance offering. We will be touching on both responsible use of GenAI as well as responsible GenAI creation. As always, we will make this free of jargon and focus on easy practical approaches that CXOs can use to get GenAI Governance and Responsible Use in place today.

But before we dive in, let’s explore the three strategies for GenAI Governance…

Three Key Elements of CXO GenAI Governance + Responsible Use Strategy

Determine if you are going to be building your own GenAI models (LLMs). If so, consider a strategy focused on Responsible Creation both in terms of algos as well as data. Determine if you are going to allow the use of GenAI in your organization (not sure you can stop it in any case). If so, focus on Responsible Usage.

- Responsible GenAI Usage – Ensure that you know how GenAI is being used, implement and enforce (usage, security, privacy, and compliance) guardrails and policy (Currently offered by Portal26: Portal26 Trail: GenAI Governance Platform)

- Responsible GenAI Creation: Data – Ensure that GenAI models can be trained on data that has been vetted against data privacy regulations. (e.g. Portal26 AI Vault: Clean AI Training Data)

- Responsible GenAI Creation: Algo – Ensure that GenAI algorithms minimize inherent bias, errors, and harmful output. (not offered by Portal26)

What is Generative AI and why is it such a big deal?

Generative AI, or GenAI, is a type of Artificial Intelligence that can translate conversational requests into actionable instructions which can be executed by a software program to synthesize responses based on a body of previously supplied information.

How does generative AI work? Well, in other words, you can ask it a question in natural language, and it will respond in the same conversational way, based on data fed to it earlier. Generative AI is not limited to text and conversational inputs and can also be utilized to synthesize images, audio, video etc.

The underlying models are often referred to as LLMs (which stands for Large Language Models). Some common GenAI tools are ChatGPT, Bard, and Anthropic’s Claude. There are thousands of GenAI models out there with hundreds of new models being created each week.

3 Reasons For Generative AI’s Explosion

GenAI is a huge deal because of the following reasons:

- Accessibility: As the natural language capabilities of GenAI continue to improve, the skill required to utilize it becomes less and less of an obstacle. As a result, anybody who can frame a question can use GenAI to support their project. This is the single largest driver of why GenAI is such a huge deal. You no longer need to be tech savvy or trained in any way. As a result, we have seen the GenAI adoption curve look steeper than anything before it. All over the world we are seeing an explosion in personal as well as business use of GenAI. This is not going to slow down anytime soon – so if you have not already hopped on to the GenAI train, it is time to buy your ticket!

- Versatility: Ever wondered “is ChatGPT generative AI?” Well, the initial GenAI explosion was set off by ChatGPT, which originally offered text-based content! Since then, GenAI has advanced to include a variety of content types including text, images, audio and video. In addition to versatility in this dimension, there is also tremendous versatility in terms of the content domain itself. GenAI models can be created for specific domains such as healthcare, finance, or even company-specific domains that analyze and synthesize content based on data belonging to the particular organization and its business. With training data volume requirements reducing over time and model accuracy increasing over time, we will be seeing GenAI models become an inherent part of how business is done in the future.

- Productivity: Creating content using GenAI is extremely fast. If you have tried ChatGPT, Bard, or other common AIs, you will notice that within a few moments of asking a question, you have in your hands a reasonably well-formulated response. Now, we must note that, as with any other piece of software, we always run the risk of garbage in, garbage out – so it is possible, and quite likely in these early days, that not everything the AI tells you will be factually accurate. Keep that in mind. However, as far as speed of content generation goes, AI will always be faster than a human by an order of magnitude. So, for the right kind of content, especially mundane and non-sensitive domains, GenAI plus human review can be a very powerful and productive combination. The productivity gains are so massive that they are driving enterprise adoption of GenAI far and fast. They will also be the reason that organizations will continue to invest in improving the quality of GenAI. As error rates go down, adoption and resulting productivity will continue to skyrocket.

Generative AI Risks – Why are CXOs so worried about GenAI?

While tremendously powerful, GenAI immediately brings up unprecedented questions. For example:

- What happens if models are trained on bad data?

- What if sensitive data ends up in models and subsequently finds its way out to users of the AI?

- What if the underlying algorithms are not inherently fair?

- What if training data is not accurate or current?

- What if data owners change their minds? Can we ever make models un-learn?

- Who is responsible if users receive harmful responses?

- Does merely using GenAI break certain types of regulatory compliance?

- What if GenAI is used by bad actors?

- How can we distinguish human created content from GenAI created content?

- Should we allow employees to use GenAI?

- If we don’t allow it, will we be left behind?

- How can security teams manage GenAI based applications?

- And so on… the list is endless – but from reading the few questions above, you should already be able to come up with a number of additional concerns that apply to your own organization

What is the connection between GenAI and Responsible Use?

With all the chatter about GenAI all around us, you have likely heard the term Responsible Use used in connection with GenAI. Let’s take a quick look at what this is about.

The age-old saying “With great power comes great responsibility” is very applicable in the case of GenAI.

If anybody, skilled or unskilled, knowledgeable or uninformed, adult or minor, can ask a question of a GenAI model, it becomes extremely important that the requester not be fed a response that is wrong, harmful, illegal, inappropriate or otherwise destructive. This is a direct, well understood and somewhat obvious risk.

If you peel things back a bit more you will realize that there are a host of additional risks when it comes to the use of GenAI.

Let’s break these risks up into two broad categories:

1. Responsible Creation

Responsible GenAI creation is concerned with how GenAI models are created and includes both AI algorithms and AI training data. Responsible creation would ensure that algorithms are inherently free of bias and errors. Responsible creation also ensures that the data used to train the AI does not cause security, privacy, compliance, and intellectual property related challenges. Whether these are issues or not is a function of whether the GenAI in question is private and for internal use only, or whether it would be accessible by external parties. It would also depend on whether training data is owned by the organization or ultimately belongs to end users who are entitled to protection under data privacy regulations etc.

2. Responsible Usage

Responsible Usage of GenAI is concerned with how GenAI is utilized. It would ensure that the use of GenAI does not break the law or run afoul of internal policies. It also mandates transparency so that business customers or consumers are aware of where GenAI has been utilized through the product or service creation and delivery process. It requires an organization to both understand where and how GenAI is used and then to suitably disclose it to their customers. As in the case of responsible creation, responsible usage ensures that the organization has security, privacy, compliance and intellectual property based guardrails in place.

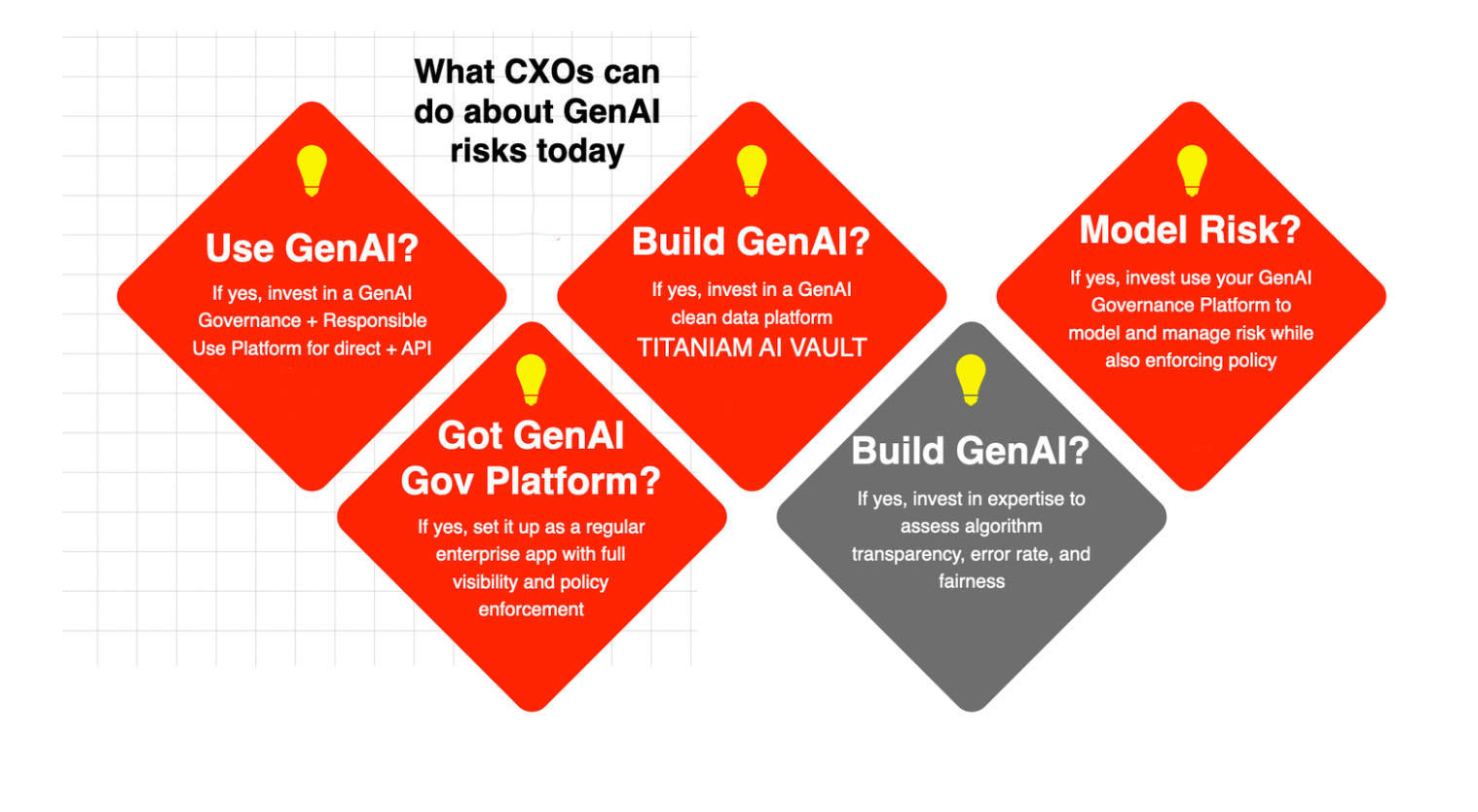
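For readers who want to see what a Responsible Usage guardrail could look like in practice, here is a minimal sketch in Python. It is an illustration only and does not represent how Portal26 Trail or any other product works. The pattern list, the `UsageRecord` fields, and the `check_prompt` function are all hypothetical names chosen for this example. The idea is simply to check a prompt against policy before it reaches a GenAI tool and to keep a record of who used which tool, when, and why.

```python
import re
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical policy: kinds of data that must not be sent to an external GenAI tool.
BLOCKED_PATTERNS = {
    "email_address": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


@dataclass
class UsageRecord:
    """One audit entry: who used which tool, when, why, and what policy found."""
    user: str
    tool: str
    purpose: str
    timestamp: str
    allowed: bool
    violations: list


def check_prompt(user, tool, purpose, prompt):
    """Check a prompt against the policy and return an audit record."""
    violations = [
        name for name, pattern in BLOCKED_PATTERNS.items() if pattern.search(prompt)
    ]
    return UsageRecord(
        user=user,
        tool=tool,
        purpose=purpose,
        timestamp=datetime.now(timezone.utc).isoformat(),
        allowed=not violations,  # allowed only when no policy pattern matched
        violations=violations,
    )


record = check_prompt(
    "alice", "chat-tool", "draft reply",
    "Summarize the complaint from jane@example.com",
)
print(record.allowed, record.violations)
```

A real deployment would need far richer policy than two regular expressions, but the shape is the same: every request produces a record that can later serve as evidence of responsible use.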

Immediate steps CXOs can take towards creating and enforcing a GenAI Governance + Responsible Use Strategy

At the moment there is a ton of hype around everything we can do with GenAI and all the places we can apply it to within the enterprise. This includes security applications where GenAI can be used to improve prevention, detection as well as response. These are all powerful applications.

However, Responsible Use is about ensuring that all these use cases are powered by responsible models and used in a responsible manner. Here is a jargon-free step-by-step guide to what you can do today. These strategies can satisfy your executive team, board, legal counsel, auditors, and customers.

- Decide if you are going to utilize external AIs either directly or via APIs.

- If you answered yes, invest in a GenAI Governance Platform. This platform will provide you with a place to house and enforce policy, monitor and remediate GenAI related incidents, provide evidence of responsible use, and answer the five key questions from your board as they relate to GenAI (Who? Where? How? When? and Why?)

- This platform will also help you treat GenAI as a standard enterprise productivity tool with the associated oversight, observability, risk modeling, and risk mitigation. (Portal26 GenAI Governance Platform provides this).

- Decide if you are going to build GenAI models for yourself.

- If you answered yes, note that AIs need to be trained on real data or data that retains all relevant attributes of real production data. For this, your data scientists need to have access to real data from which they will derive what they need to feed into the AI. This creates massive amounts of risk: not only is the data sensitive, but you also need enormous amounts of it on a continuous basis for ongoing model accuracy.

- Reduce this exposure by investing in an AI Data Training Vault that provides strong data security (NIST FIPS 140-2 validated encryption) while retaining full featured analytics. (Portal26 AI Vault provides this)

- This platform will also generate clean and compliant AI training data pipelines that pass data that has been cleansed using granular privacy controls, into AI models (Portal26 AI Vault provides this both with and without an actual vault)

- If you answered yes to building models, you will also need to either start with a transparent AI algorithm framework or utilize AI scientists to examine the algorithms to ensure fair and error free GenAI output.
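To give a feel for what "cleansing" training data can mean, here is a minimal Python sketch of a pipeline step that masks sensitive values before records are passed to a model. It is an assumption-laden illustration, not a description of Portal26 AI Vault. The rule list and the `cleanse` and `clean_pipeline` names are hypothetical, and real privacy controls would cover many more data types than the two shown.

```python
import re

# Hypothetical redaction rules: (pattern to find, placeholder to substitute).
REDACTION_RULES = [
    (re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"), "[EMAIL]"),
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
]


def cleanse(text):
    """Replace every match of each redaction rule with its placeholder."""
    for pattern, placeholder in REDACTION_RULES:
        text = pattern.sub(placeholder, text)
    return text


def clean_pipeline(records):
    """Yield cleansed records one at a time, so large datasets can be streamed."""
    for record in records:
        yield cleanse(record)


raw_records = [
    "Contact bob@example.com, SSN 123-45-6789.",
    "Customer asked about invoice timing.",
]
for cleaned in clean_pipeline(raw_records):
    print(cleaned)
```

The design choice worth noting is that cleansing happens before the data ever reaches the training step, so data scientists and models only see the masked version.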

Conclusion: GenAI is here to stay. Act now to build a strong foundation

If you are not already investigating the use of GenAI, start now. Consider investing in GenAI Governance + Responsible Use solutions. Attackers will get there as fast as, if not faster than, the general population.

Security teams need to be ready with both the ability to know what is going on and also to do something about it! Portal26 can help.

To learn more about Portal26’s GenAI Governance Offering and AI TRiSM solution, please explore the below or book a demo today.

Download Our Latest Report

4 Ways Generative AI Will Impact CISOs and Their Teams

Many business and IT project teams have already launched GenAI initiatives, or will start soon. CISOs and security teams need to prepare for impacts from generative AI in four different areas:

- “Defend with” generative cybersecurity AI.

- “Attacked by” GenAI.

- Secure enterprise initiatives to “build” GenAI applications.

- Manage and monitor how the organization “consumes” GenAI.

Download this research to receive actionable recommendations for each of the four impact areas.